Gecko

All items from January 2024

25 Jan 2024 : Day 149

It's my last dev diary before taking a 14 day break from gecko development today. I'm not convinced that I've made the right decision: there's a part of me that thinks I should forge on ahead and just make all of the things fit into the time I have available. But there's also the realist in me that says something has to give.


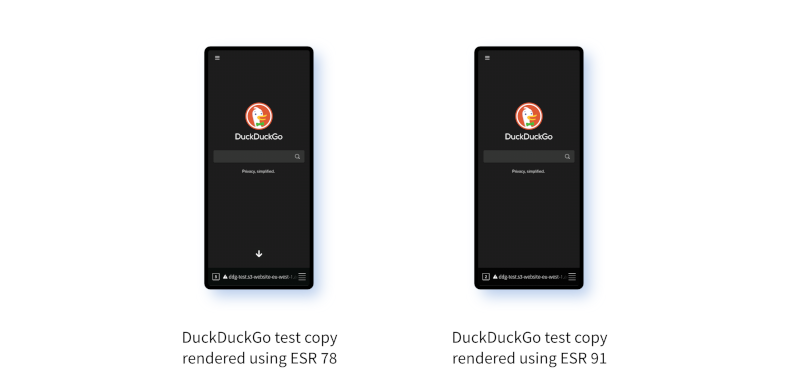


So there will be no dev diary tomorrow or until the 8th February, at which point I'll start up again. Ostensibly the reason is so that I can get my presentations together for FOSDEM. I want to do a decent job with the presentations. But I also have a lot going on at work right now. So it's necessary.

But there's still development to do today. Yesterday I set the build running after having added /browser/components/sessionstore to the embedlite/moz.build file. I was hoping this would result in SessionStore.jsm and its dependencies being added to omni.ja.

The package built fine. But after installing it on my device, the new files weren't to be found: they didn't make it into the archive. Worse than that, they've not even made it into the obj-build-mer-qt-xr folder on my laptop. That means they're still not getting included in the build.

It rather makes sense as well. The LOCAL_INCLUDES value should, if I'm understanding correctly, list places where C++ headers might be found. This shouldn't affect JavaScript files at all.

So I've spent the day digging around in the build system trying to figure out what needs to be changed to get them where they need to be. I'm certain there's an easy answer, but I just can't seem to figure it out.

I thought about trying to override SessionStore.jsm as a component, but since it doesn't actually seem to be a component itself, this didn't work either.

So after much flapping around, I've decided just to patch out the functionality from the SessionStoreFunctions.jsm file. That doesn't feel like the right way to do this, but until someone suggests a better way (which I'm all open to!) this should at least be a pretty simple fix.

Let's see.

I've built a new version of gecko-dev with the changes applied, installed them on my phone and it's now time to run them.

    $ sailfish-browser
    [D] unknown:0 - Using Wayland-EGL
    library "libGLESv2_adreno.so" not found
    library "eglSubDriverAndroid.so" not found
    greHome from GRE_HOME:/usr/bin
    libxul.so is not found, in /usr/bin/libxul.so
    Created LOG for EmbedLiteTrace
    [D] onCompleted:105 - ViewPlaceholder requires a SilicaFlickable parent
    Created LOG for EmbedLite
    Created LOG for EmbedPrefs
    Created LOG for EmbedLiteLayerManager
    JavaScript error: chrome://embedlite/content/embedhelper.js, line 259:
        TypeError: sessionHistory is null
    Call EmbedLiteApp::StopChildThread()
    Redirecting call to abort() to mozalloc_abort

The log output is encouragingly quiet. There is one error stating that sessionHistory is null. I think this is unrelated to the SessionStore changes I've made, but it's still worth looking into this to fix it. Maybe this will be what fixes the Back and Forwards buttons?

What's clear is that the SessionStore errors have now gone, which is great. But fixing those errors sadly hasn't fixed the Back and Forwards buttons.

Let's look at this other error then. It's caused by the last line in this code block:

    case "embedui:addhistory": {
      // aMessage.data contains: 1) list of 'links' loaded from DB, 2) current
      //                         'index'.
      let docShell = content.docShell;
      let sessionHistory = docShell.QueryInterface(Ci.nsIWebNavigation).
                           sessionHistory;
      let legacyHistory = sessionHistory.legacySHistory;

The penultimate line is essentially saying "View the docShell object as a WebNavigation object and access the sessionHistory value stored inside it".

So there are three potential reasons why this might be failing. First it could be that docShell no longer supports the WebNavigation interface. Second it could be that WebNavigation has changed so it no longer contains a sessionHistory value. Third it could be that the value is still there, but it's set to null.
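
To make those three possibilities concrete, here's a minimal sketch in plain JavaScript. It doesn't touch any real Gecko objects: makeDocShell() and diagnoseSessionHistory() are hypothetical helpers, and the mock QueryInterface() simply throws when the requested name isn't in its supported list, which is an assumption standing in for the real XPCOM behaviour.

```javascript
// Hypothetical mock of a docShell: it "supports" a list of interface names
// and carries whatever properties we hand it. Nothing here is Gecko API.
function makeDocShell(supported, props) {
  const shell = Object.assign({}, props);
  shell.QueryInterface = function (iface) {
    if (!supported.includes(iface)) {
      throw new Error("NS_NOINTERFACE");
    }
    return shell;
  };
  return shell;
}

// Distinguish the three failure cases described in the text.
function diagnoseSessionHistory(docShell, iface) {
  let nav;
  try {
    nav = docShell.QueryInterface(iface);
  } catch (e) {
    return "interface not supported"; // case 1
  }
  if (!("sessionHistory" in nav)) {
    return "attribute missing"; // case 2
  }
  if (nav.sessionHistory === null) {
    return "attribute is null"; // case 3
  }
  return "ok";
}

const IFACE = "nsIWebNavigation";
console.log(diagnoseSessionHistory(makeDocShell([], {}), IFACE));
// → interface not supported
console.log(diagnoseSessionHistory(makeDocShell([IFACE], {}), IFACE));
// → attribute missing
console.log(diagnoseSessionHistory(makeDocShell([IFACE], { sessionHistory: null }), IFACE));
// → attribute is null
```

The error message in the log ("sessionHistory is null") already hints at the third case, but checking the first two against the source is what rules them out properly.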

From the nsDocShell class definition in nsDocShell.h it's clear that the interface is still supported:

    class nsDocShell final : public nsDocLoader,
                             public nsIDocShell,
                             public nsIWebNavigation,
                             public nsIBaseWindow,
                             public nsIRefreshURI,
                             public nsIWebProgressListener,
                             public nsIWebPageDescriptor,
                             public nsIAuthPromptProvider,
                             public nsILoadContext,
                             public nsINetworkInterceptController,
                             public nsIDeprecationWarner,
                             public mozilla::SupportsWeakPtr {

So let's check that WebNavigation interface, defined in the nsIWebNavigation.idl file. The field is still there in the interface definition:

    /**
     * The session history object used by this web navigation instance. This
     * object will be a mozilla::dom::ChildSHistory object, but is returned as
     * nsISupports so it can be called from JS code.
     */
    [binaryname(SessionHistoryXPCOM)]
    readonly attribute nsISupports sessionHistory;

Although the interface is being accessed from nsDocShell, when we look at the code we can see that the history itself is coming from elsewhere:

    mozilla::dom::ChildSHistory* GetSessionHistory() {
      return mBrowsingContext->GetChildSessionHistory();
    }
    [...]
    RefPtr<mozilla::dom::BrowsingContext> mBrowsingContext;

This provides us with an opportunity, because it means we can place a breakpoint here to see what it's doing.

    $ gdb sailfish-browser
    [...]
    (gdb) b nsDocShell::GetSessionHistory
    Breakpoint 1 at 0x7fbc7b37c4: nsDocShell::GetSessionHistory. (10 locations)
    (gdb) b BrowsingContext::GetChildSessionHistory
    Breakpoint 2 at 0x7fbc7b376c: file docshell/base/BrowsingContext.cpp, line 3314.
    (gdb) c
    Thread 10 "GeckoWorkerThre" hit Breakpoint 1, nsDocShell::GetSessionHistory
        (this=0x7f80aa9280)
        at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/RefPtr.h:313
    313     ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/RefPtr.h:
        No such file or directory.
    (gdb) c
    Continuing.
    Thread 10 "GeckoWorkerThre" hit Breakpoint 2, mozilla::dom::BrowsingContext::
        GetChildSessionHistory (this=0x7f80c58e90)
        at docshell/base/BrowsingContext.cpp:3314
    3314    ChildSHistory* BrowsingContext::GetChildSessionHistory() {
    (gdb) b mChildSessionHistory
    Function "mChildSessionHistory" not defined.
    Make breakpoint pending on future shared library load? (y or [n]) n
    (gdb) p mChildSessionHistory
    $1 = {mRawPtr = 0x0}
    (gdb)

So this value is unset, but we'd expect it to be set as a consequence of a call to CreateChildSHistory():

    void BrowsingContext::CreateChildSHistory() {
      MOZ_ASSERT(IsTop());
      MOZ_ASSERT(GetHasSessionHistory());
      MOZ_DIAGNOSTIC_ASSERT(!mChildSessionHistory);

      // Because session history is global in a browsing context tree, every process
      // that has access to a browsing context tree needs access to its session
      // history. That is why we create the ChildSHistory object in every process
      // where we have access to this browsing context (which is the top one).
      mChildSessionHistory = new ChildSHistory(this);

      // If the top browsing context (this one) is loaded in this process then we
      // also create the session history implementation for the child process.
      // This can be removed once session history is stored exclusively in the
      // parent process.
      mChildSessionHistory->SetIsInProcess(IsInProcess());
    }

As I'm looking through this code in ESR 91 and ESR 78 I notice that the above method has changed: the call to SetIsInProcess() is new. I wonder if that will ultimately be related to why this isn't being set? I'm thinking that the location where the creation happens may be different.

There are indeed some differences. In ESR 91 it's called in BrowsingContext::CreateFromIPC() and BrowsingContext::Attach() whereas in ESR 78 it's called in BrowsingContext::Attach(). Both versions also have it being called from BrowsingContext::DidSet().

I should put some breakpoints on those methods to see which, if any, is being called. And I should do it for both versions.

Here's the result for ESR 91:

    $ gdb sailfish-browser
    [...]
    (gdb) b BrowsingContext::CreateChildSHistory
    Function "BrowsingContext::CreateChildSHistory" not defined.
    Make breakpoint pending on future shared library load? (y or [n]) y
    Breakpoint 1 (BrowsingContext::CreateChildSHistory) pending.
    (gdb) r
    [...]

The breakpoint is never hit and the creation never occurs. In contrast, on ESR 78 we get a hit before the first page has loaded:

    $ gdb sailfish-browser
    [...]
    (gdb) b BrowsingContext::CreateChildSHistory
    Function "BrowsingContext::CreateChildSHistory" not defined.
    Make breakpoint pending on future shared library load? (y or [n]) y
    Breakpoint 1 (BrowsingContext::CreateChildSHistory) pending.
    (gdb) r
    [...]
    Thread 8 "GeckoWorkerThre" hit Breakpoint 1, mozilla::dom::BrowsingContext::
        CreateChildSHistory (this=this@entry=0x7f889dc120)
        at obj-build-mer-qt-xr/dist/include/mozilla/cxxalloc.h:33
    33      obj-build-mer-qt-xr/dist/include/mozilla/cxxalloc.h:
        No such file or directory.
    (gdb) bt
    #0  mozilla::dom::BrowsingContext::CreateChildSHistory
        (this=this@entry=0x7f889dc120)
        at obj-build-mer-qt-xr/dist/include/mozilla/cxxalloc.h:33
    #1  0x0000007fbc7c933c in mozilla::dom::BrowsingContext::DidSet
        (aOldValue=<optimized out>, this=0x7f889dc120)
        at docshell/base/BrowsingContext.cpp:2356
    #2  mozilla::dom::syncedcontext::Transaction<mozilla::dom::BrowsingContext>::
        Apply(mozilla::dom::BrowsingContext*)::{lambda(auto:1)#1}::operator()
        <std::integral_constant<unsigned long, 37ul> >(std::integral_constant
        <unsigned long, 37ul>) const (this=<optimized out>, this=<optimized out>,
        idx=...)
        at obj-build-mer-qt-xr/dist/include/mozilla/dom/SyncedContextInlines.h:137
    [...]
    #8  0x0000007fbc7cb484 in mozilla::dom::BrowsingContext::SetHasSessionHistory
        <bool> (this=this@entry=0x7f889dc120,
        aValue=aValue@entry=@0x7fa6e1da57: true)
        at obj-build-mer-qt-xr/dist/include/mozilla/OperatorNewExtensions.h:47
    #9  0x0000007fbc7cb54c in mozilla::dom::BrowsingContext::InitSessionHistory
        (this=0x7f889dc120)
        at docshell/base/BrowsingContext.cpp:2316
    #10 0x0000007fbc7cb590 in mozilla::dom::BrowsingContext::InitSessionHistory
        (this=this@entry=0x7f889dc120)
        at obj-build-mer-qt-xr/dist/include/mozilla/dom/BrowsingContext.h:161
    #11 0x0000007fbc901fac in nsWebBrowser::Create (aContainerWindow=
        <optimized out>, aParentWidget=<optimized out>,
        aBrowsingContext=aBrowsingContext@entry=0x7f889dc120,
        aInitialWindowChild=aInitialWindowChild@entry=0x0)
        at toolkit/components/browser/nsWebBrowser.cpp:158
    #12 0x0000007fbca950e8 in mozilla::embedlite::EmbedLiteViewChild::
        InitGeckoWindow (this=0x7f88bca840, parentId=0, parentBrowsingContext=0x0,
        isPrivateWindow=<optimized out>, isDesktopMode=false)
        at obj-build-mer-qt-xr/dist/include/nsCOMPtr.h:847
    [...]
    #33 0x0000007fb735e89c in ?? () from /lib64/libc.so.6
    (gdb) c
    Continuing.
    [...]

This could well be our smoking gun. I need to check back through the backtrace to understand the process happening on ESR 78 and then establish why something similar isn't happening on ESR 91. Progress!

Immediately on examining the backtrace it's clear something odd is happening. The caller is BrowsingContext::DidSet() which is the only location where the call is made in both ESR 78 and ESR 91. So that does rather raise the question of why it's not getting called in ESR 91.

Digging back through the backtrace further, it eventually materialises that the difference is happening in nsWebBrowser::Create(). There's this bit of code in ESR 78 that looks like this:

    // If the webbrowser is a content docshell item then we won't hear any
    // events from subframes. To solve that we install our own chrome event
    // handler that always gets called (even for subframes) for any bubbling
    // event.

    if (aBrowsingContext->IsTop()) {
      aBrowsingContext->InitSessionHistory();
    }

    NS_ENSURE_SUCCESS(docShellAsWin->Create(), nullptr);

    docShellTreeOwner->AddToWatcher();  // evil twin of Remove in SetDocShell(0)
    docShellTreeOwner->AddChromeListeners();

You can see the InitSessionHistory() call in there which eventually leads to the creation of our sessionHistory object. In ESR 91 that same bit of code looks like this:

    // If the webbrowser is a content docshell item then we won't hear any
    // events from subframes. To solve that we install our own chrome event
    // handler that always gets called (even for subframes) for any bubbling
    // event.

    nsresult rv = docShell->InitWindow(nullptr, docShellParentWidget, 0, 0, 0, 0);
    if (NS_WARN_IF(NS_FAILED(rv))) {
      return nullptr;
    }

    docShellTreeOwner->AddToWatcher();  // evil twin of Remove in SetDocShell(0)
    docShellTreeOwner->AddChromeListeners();

Where has the InitSessionHistory() gone? It should be possible to find out using a bit of git log searching. Here we're following the rule of using git blame to find out about lines that have been added and git log -S to find out about lines that have been removed.

    $ git log -1 -S InitSessionHistory toolkit/components/browser/nsWebBrowser.cpp
    commit 140a4164598e0c9ed537a377cf66ef668a7fbc25
    Author: Randell Jesup <rjesup@wgate.com>
    Date:   Mon Feb 1 22:57:12 2021 +0000

        Bug 1673617 - Create BrowsingContext::mChildSessionHistory more
        aggressively, r=nika

        Differential Revision: https://phabricator.services.mozilla.com/D100348

Just looking at this change, it removes the call to InitSessionHistory() in nsWebBrowser::Create() and moves it to nsFrameLoader::TryRemoteBrowserInternal(). There are some related changes in the parent Bug 1673617, but looking through those it doesn't seem that they're anything we need to worry about.

Placing a breakpoint on nsFrameLoader::TryRemoteBrowserInternal() shows that it's not being called on ESR 91.
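
The effect of that move can be illustrated with a toy model in plain JavaScript. This is only a sketch of the call chain visible in the ESR 78 backtrace (InitSessionHistory, SetHasSessionHistory, DidSet, CreateChildSHistory); the class, the two create functions and the frameLoaderRuns flag are all made up for illustration, and the real DidSet() logic is certainly more involved than the single condition assumed here.

```javascript
// Toy stand-in for mozilla::dom::BrowsingContext; not the real class.
class ToyBrowsingContext {
  constructor() {
    this.hasSessionHistory = false;
    this.childSessionHistory = null; // plays the role of mChildSessionHistory
  }
  initSessionHistory() {
    this.setHasSessionHistory(true);
  }
  setHasSessionHistory(value) {
    const oldValue = this.hasSessionHistory;
    this.hasSessionHistory = value;
    this.didSet(oldValue);
  }
  didSet(oldValue) {
    // Assumed simplification: create the history the first time the flag flips.
    if (!oldValue && this.hasSessionHistory && this.childSessionHistory === null) {
      this.createChildSHistory();
    }
  }
  createChildSHistory() {
    this.childSessionHistory = { entries: [] };
  }
}

// ESR 78 style: the web browser creation step initialises the history itself.
function createWebBrowserEsr78(bc) {
  bc.initSessionHistory();
  return bc;
}

// ESR 91 style: creation no longer does this; it only happens if the
// (hypothetical) frame loader path runs.
function createWebBrowserEsr91(bc, frameLoaderRuns) {
  if (frameLoaderRuns) {
    bc.initSessionHistory();
  }
  return bc;
}

console.log(createWebBrowserEsr78(new ToyBrowsingContext()).childSessionHistory !== null);
// → true
console.log(createWebBrowserEsr91(new ToyBrowsingContext(), false).childSessionHistory);
// → null
```

With the frame loader path never taken, the model ends up in the same state the debugger showed: a null history object that later code trips over.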

The interesting thing is that EnsureRemoteBrowser() appears to get called in this bit of code:

    BrowsingContext* nsFrameLoader::GetBrowsingContext() {
      if (mNotifyingCrash) {
        if (mPendingBrowsingContext && mPendingBrowsingContext->EverAttached()) {
          return mPendingBrowsingContext;
        }
        return nullptr;
      }
      if (IsRemoteFrame()) {
        Unused << EnsureRemoteBrowser();
      } else if (mOwnerContent) {
        Unused << MaybeCreateDocShell();
      }
      return GetExtantBrowsingContext();
    }

If the first path in the second condition is followed then InitSessionHistory() would get called too. If this gets called but InitSessionHistory() doesn't, it would imply that IsRemoteFrame() must be false. But if it is false then MaybeCreateDocShell() could get called, which also has a call to InitSessionHistory() like this:

    // If we are an in-process browser, we want to set up our session history.
    if (mIsTopLevelContent && mOwnerContent->IsXULElement(nsGkAtoms::browser) &&
        !mOwnerContent->HasAttr(kNameSpaceID_None, nsGkAtoms::disablehistory)) {
      // XXX(nika): Set this up more explicitly?
      mPendingBrowsingContext->InitSessionHistory();
    }
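
The branch reasoning above can be written out as a small decision function. This is a plain JavaScript sketch, not Gecko code: the loader object and its flag names are hypothetical, and it follows the reading given here that the remote path always ends in InitSessionHistory(), which is an assumption rather than something checked against the source.

```javascript
// Returns the sequence of steps a toy frame loader would take if
// GetBrowsingContext() were called on it. All field names are made up.
function getBrowsingContextSteps(loader) {
  const steps = [];
  if (loader.isRemoteFrame) {
    // Remote path: assumed to lead on to InitSessionHistory().
    steps.push("EnsureRemoteBrowser", "InitSessionHistory");
  } else if (loader.hasOwnerContent) {
    steps.push("MaybeCreateDocShell");
    // In-process path: history is only set up for a top-level browser
    // element that hasn't disabled history.
    if (loader.isTopLevelContent && loader.isXulBrowser && !loader.disableHistory) {
      steps.push("InitSessionHistory");
    }
  }
  return steps;
}

console.log(getBrowsingContextSteps({ isRemoteFrame: true }).join(" > "));
// → EnsureRemoteBrowser > InitSessionHistory
console.log(getBrowsingContextSteps({
  hasOwnerContent: true,
  isTopLevelContent: true,
  isXulBrowser: true,
  disableHistory: false,
}).join(" > "));
// → MaybeCreateDocShell > InitSessionHistory
```

Either branch can reach InitSessionHistory(), so if it's never reached the simplest explanation is that the function containing the branches isn't being entered at all.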

I wonder what's going on around this code then. Checking with the debugger the answer turns out to be that apparently, nsFrameLoader::GetExtantBrowsingContext() simply doesn't get called either.

From here, things pan out. The EnsureRemoteBrowser() method is called by all of these methods:

- nsFrameLoader::GetBrowsingContext()

- nsFrameLoader::ShowRemoteFrame()

- nsFrameLoader::ReallyStartLoadingInternal()

However this isn't true in ESR 78 where the constructor does get called. It's almost certainly worthwhile finding out about this difference. But unfortunately I'm out of time for today.

I have to wrap things up. As I mentioned previously, I'm taking a break for two weeks to give myself a bit more breathing space as I prepare for FOSDEM, which I'm really looking forward to. If you're travelling to Brussels yourself then I hope to see you there. You'll be able to find me mostly on the Linux on Mobile stand.

When I get back I'll be back to posting these dev diaries again. And as a note to myself, when I do, my first action must be to figure out why nsFrameLoader is being created in ESR 78 but not ESR 91. That might hold the key to why the sessionHistory isn't getting created in ESR 91. And as a last resort, I can always revert commit 140a4164598e0c9ed53.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

24 Jan 2024 : Day 148

After much digging around in the code and gecko project structure I eventually decided that the best thing to do is implement a Sailfish-specific version of the SessionStore.jsm module.


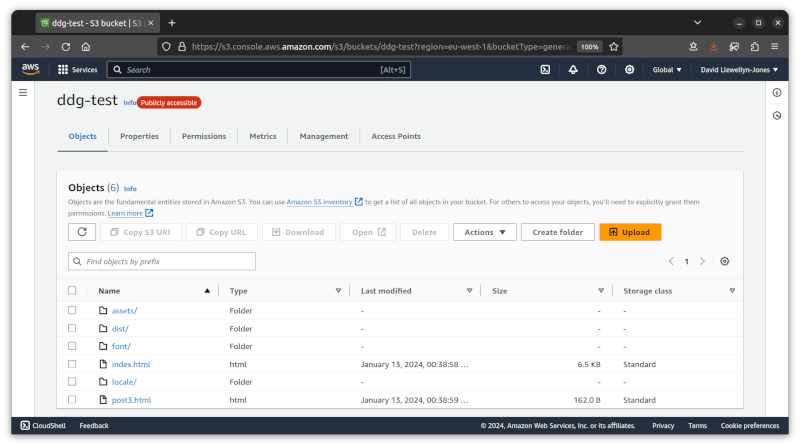


Unfortunately this isn't just a case of copying the file over to embedlite-components, because it has some dependencies. These are listed at the top of the file. Let's go through and figure out which ones are already available, which we can remove, which we can copy over directly to embedlite-components and which we have to reimplement ourselves.

Here's the code that relates to the dependencies:

    const { PrivateBrowsingUtils } = ChromeUtils.import(
      "resource://gre/modules/PrivateBrowsingUtils.jsm"
    );
    const { Services } = ChromeUtils.import("resource://gre/modules/Services.jsm");
    const { TelemetryTimestamps } = ChromeUtils.import(
      "resource://gre/modules/TelemetryTimestamps.jsm"
    );
    const { XPCOMUtils } = ChromeUtils.import(
      "resource://gre/modules/XPCOMUtils.jsm"
    );

    ChromeUtils.defineModuleGetter(
      this,
      "SessionHistory",
      "resource://gre/modules/sessionstore/SessionHistory.jsm"
    );

    XPCOMUtils.defineLazyServiceGetters(this, {
      gScreenManager: ["@mozilla.org/gfx/screenmanager;1", "nsIScreenManager"],
    });

    XPCOMUtils.defineLazyModuleGetters(this, {
      AppConstants: "resource://gre/modules/AppConstants.jsm",
      AsyncShutdown: "resource://gre/modules/AsyncShutdown.jsm",
      BrowserWindowTracker: "resource:///modules/BrowserWindowTracker.jsm",
      DevToolsShim: "chrome://devtools-startup/content/DevToolsShim.jsm",
      E10SUtils: "resource://gre/modules/E10SUtils.jsm",
      GlobalState: "resource:///modules/sessionstore/GlobalState.jsm",
      HomePage: "resource:///modules/HomePage.jsm",
      PrivacyFilter: "resource://gre/modules/sessionstore/PrivacyFilter.jsm",
      PromiseUtils: "resource://gre/modules/PromiseUtils.jsm",
      RunState: "resource:///modules/sessionstore/RunState.jsm",
      SessionCookies: "resource:///modules/sessionstore/SessionCookies.jsm",
      SessionFile: "resource:///modules/sessionstore/SessionFile.jsm",
      SessionSaver: "resource:///modules/sessionstore/SessionSaver.jsm",
      SessionStartup: "resource:///modules/sessionstore/SessionStartup.jsm",
      TabAttributes: "resource:///modules/sessionstore/TabAttributes.jsm",
      TabCrashHandler: "resource:///modules/ContentCrashHandlers.jsm",
      TabState: "resource:///modules/sessionstore/TabState.jsm",
      TabStateCache: "resource:///modules/sessionstore/TabStateCache.jsm",
      TabStateFlusher: "resource:///modules/sessionstore/TabStateFlusher.jsm",
      setTimeout: "resource://gre/modules/Timer.jsm",
    });

Here's a table to summarise. I've ordered them by their current status to help highlight what needs work and what type of work it is.

| Module | Variable | Status |
|---|---|---|
| gre/modules/PrivateBrowsingUtils.jsm | PrivateBrowsingUtils | Available |
| gre/modules/Services.jsm | Services | Available |
| gre/modules/TelemetryTimestamps.jsm | TelemetryTimestamps | Available |
| gre/modules/XPCOMUtils.jsm | XPCOMUtils | Available |
| gre/modules/sessionstore/SessionHistory.jsm | SessionHistory | Available |
| gre/modules/AppConstants.jsm | AppConstants | Available |
| gre/modules/AsyncShutdown.jsm | AsyncShutdown | Available |
| gre/modules/E10SUtils.jsm | E10SUtils | Available |
| gre/modules/sessionstore/PrivacyFilter.jsm | PrivacyFilter | Available |
| gre/modules/PromiseUtils.jsm | PromiseUtils | Available |
| gre/modules/Timer.jsm | setTimeout | Available |
| @mozilla.org/gfx/screenmanager;1 | gScreenManager | Drop |
| modules/ContentCrashHandlers.jsm | TabCrashHandler | Drop |
| devtools-startup/content/DevToolsShim.jsm | DevToolsShim | Drop |
| modules/sessionstore/TabAttributes.jsm | TabAttributes | Copy |
| modules/sessionstore/GlobalState.jsm | GlobalState | Copy |
| modules/sessionstore/RunState.jsm | RunState | Copy |
| modules/BrowserWindowTracker.jsm | BrowserWindowTracker | Drop |
| modules/sessionstore/SessionCookies.jsm | SessionCookies | Drop? |
| modules/HomePage.jsm | HomePage | Drop? |
| modules/sessionstore/SessionFile.jsm | SessionFile | Copy/drop? |
| modules/sessionstore/SessionSaver.jsm | SessionSaver | Copy/drop? |
| modules/sessionstore/SessionStartup.jsm | SessionStartup | Copy/drop? |
| modules/sessionstore/TabState.jsm | TabState | Copy/drop? |
| modules/sessionstore/TabStateCache.jsm | TabStateCache | Copy/drop? |
| modules/sessionstore/TabStateFlusher.jsm | TabStateFlusher | Copy/drop? |
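
For a quick tally of the table, here's a small plain JavaScript sketch that counts the dependencies by status. The variable names and statuses are copied from the table; the dependencies array and countByStatus() helper are just illustrative names.

```javascript
// Each entry is [variable, status], transcribed from the table above.
const dependencies = [
  ["PrivateBrowsingUtils", "Available"], ["Services", "Available"],
  ["TelemetryTimestamps", "Available"], ["XPCOMUtils", "Available"],
  ["SessionHistory", "Available"], ["AppConstants", "Available"],
  ["AsyncShutdown", "Available"], ["E10SUtils", "Available"],
  ["PrivacyFilter", "Available"], ["PromiseUtils", "Available"],
  ["setTimeout", "Available"],
  ["gScreenManager", "Drop"], ["TabCrashHandler", "Drop"],
  ["DevToolsShim", "Drop"], ["BrowserWindowTracker", "Drop"],
  ["TabAttributes", "Copy"], ["GlobalState", "Copy"], ["RunState", "Copy"],
  ["SessionCookies", "Drop?"], ["HomePage", "Drop?"],
  ["SessionFile", "Copy/drop?"], ["SessionSaver", "Copy/drop?"],
  ["SessionStartup", "Copy/drop?"], ["TabState", "Copy/drop?"],
  ["TabStateCache", "Copy/drop?"], ["TabStateFlusher", "Copy/drop?"],
];

// Count how many dependencies fall under each status.
function countByStatus(deps) {
  const counts = {};
  for (const [, status] of deps) {
    counts[status] = (counts[status] || 0) + 1;
  }
  return counts;
}

console.log(dependencies.length); // → 26
console.log(countByStatus(dependencies));
```

Of the 26 dependencies, 11 are already available, 3 can be copied as they are, 4 can be dropped outright and the remaining 8 still carry a question mark.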

In addition to the above there's also the SessionStore.jsm file itself.

As you can see there's still a fair bit of uncertainty in the table. But also, quite a large number of the dependencies are already available.

From the code it looks like the functionality is around saving and restoring sessions, including tab data, cookies, window positions and the like. Some of this isn't relevant on Sailfish OS (there's no point saving and restoring window sizes) or is already handled by other parts of the system (cookie storage). In fact, it's not clear that this module is providing any additional functionality that sailfish-browser actually needs.

Given this my focus will be on creating a minimal implementation that doesn't error when called but performs very little functionality in practice. That will hopefully make the task tractable.
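
One way such a "does nothing but doesn't error" module could look is sketched below in plain JavaScript, using a Proxy so that any method name resolves to a no-op. This is an illustration of the idea rather than the implementation that ends up in embedlite-components: makeNoOpModule() and the method names called on the stub are hypothetical, and a real .jsm would also need its exports set up, which isn't shown.

```javascript
// Hypothetical helper: builds an object on which every property lookup
// yields a function that records the call and otherwise does nothing.
function makeNoOpModule(name, log) {
  return new Proxy({}, {
    get(_target, prop) {
      return (...args) => {
        log.push(`${name}.${String(prop)}(${args.length} args)`);
        // Deliberately return nothing: callers get undefined, not an error.
      };
    },
  });
}

const log = [];
const SessionStoreStub = makeNoOpModule("SessionStore", log);

// Made-up method names, standing in for whatever the callers expect.
SessionStoreStub.hypotheticalUpdate({}, {});
SessionStoreStub.anotherHypotheticalCall();

console.log(log);
// → [ 'SessionStore.hypotheticalUpdate(2 args)', 'SessionStore.anotherHypotheticalCall(0 args)' ]
```

Recording the calls isn't strictly needed, but it makes it easy to see which methods the rest of the code actually tries to use, which helps decide what, if anything, deserves a real implementation later.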

It's early in the morning here still, but time for me to start work; so I'll pick this up again tonight.

[...]

It's now late evening and I have just a bit of time to move some files around. I've started by copying the SessionStore.jsm file into the embedlite-components project, alongside the other files I think I can copy without making changes. Apart from SessionStore.jsm, I've tried to copy over only files that don't require dependencies, or where the dependencies are all available.

    $ find . -iname "SessionStore.jsm"
    ./gecko-dev/browser/components/sessionstore/SessionStore.jsm
    $ cp ./gecko-dev/browser/components/sessionstore/SessionStore.jsm \
        ../../embedlite-components/jscomps/
    $ cp ./gecko-dev/browser/components/sessionstore/TabAttributes.jsm \
        ../../embedlite-components/jscomps/
    $ cp ./gecko-dev/browser/components/sessionstore/GlobalState.jsm \
        ../../embedlite-components/jscomps/
    $ cp ./gecko-dev/browser/components/sessionstore/RunState.jsm \
        ../../embedlite-components/jscomps/

I've also been through and removed all of the code that used any of the dropped dependencies. And in fact I've gone ahead and dropped all of the modules marked as "Drop" or "Copy/drop" in the table above. Despite the quantity of code in the original files, it really doesn't look like there's much functionality that's needed for sailfish-browser in these scripts. But having the functions available may turn out to be useful at some point in the future and in the meantime if the module just provides methods that don't do anything, then they will at least be successful in suppressing the errors.

The final step is to hook them up into the system so that they get included and can be accessed by other parts of the code. And this is where I hit a problem. The embedlite-components package contains two types of JavaScript entity. The first are in the jscomps folder. These all seem to be components that have a defined interface (they satisfy a "contract") as specified in the EmbedLiteJSComponents.manifest file. Here's an example of the entry for the AboutRedirector component:

    # AboutRedirector.js
    component {59f3da9a-6c88-11e2-b875-33d1bd379849} AboutRedirector.js
    contract @mozilla.org/network/protocol/about;1?what= {59f3da9a-6c88-11e2-b875-33d1bd379849}
    contract @mozilla.org/network/protocol/about;1?what=embedlite {59f3da9a-6c88-11e2-b875-33d1bd379849}
    contract @mozilla.org/network/protocol/about;1?what=certerror {59f3da9a-6c88-11e2-b875-33d1bd379849}
    contract @mozilla.org/network/protocol/about;1?what=home {59f3da9a-6c88-11e2-b875-33d1bd379849}

Our SessionStore.jsm files can't be added like this because they're not components with defined interfaces in this way. The other type are in the jsscripts folder. These aren't components and the files would fit perfectly in there. But they are all accessed using a particular path and the chrome:// scheme, like this:

    const { NetErrorHelper } = ChromeUtils.import("chrome://embedlite/content/NetErrorHelper.jsm")

This won't work for SessionStore.jsm, which is expected to be accessed like this:

XPCOMUtils.defineLazyModuleGetters(this, {

SessionStore: "resource:///modules/sessionstore/SessionStore.jsm",

});

Different location; different approach.

So I'm going to need to find some other way to do this. As it's late, it will take me a while to come up with an alternative. But my immediate thought is that maybe I can just add the missing files in to the EmbedLite build process. It looks like this is being controlled by the embedlite/moz.build file. So I've added the component folder /browser/components/sessionstore into the list of directories there:

LOCAL_INCLUDES += [

'!/build',

'/browser/components/sessionstore',

'/dom/base',

'/dom/ipc',

'/gfx/layers',

'/gfx/layers/apz/util',

'/hal',

'/js/xpconnect/src',

'/netwerk/base/',

'/toolkit/components/resistfingerprinting',

'/toolkit/xre',

'/widget',

'/xpcom/base',

'/xpcom/build',

'/xpcom/threads',

'embedhelpers',

'embedprocess',

'embedshared',

'embedthread',

'modules',

'utils',

]

I've added the directory, cleaned out the build directory and started off a fresh build to run overnight. Let's see whether that worked in the morning.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

23 Jan 2024 : Day 147 #

Last night I woke up in a mild panic. This happens sometimes, usually when I have a lot going on and I feel like I'm in danger of dropping the ball.

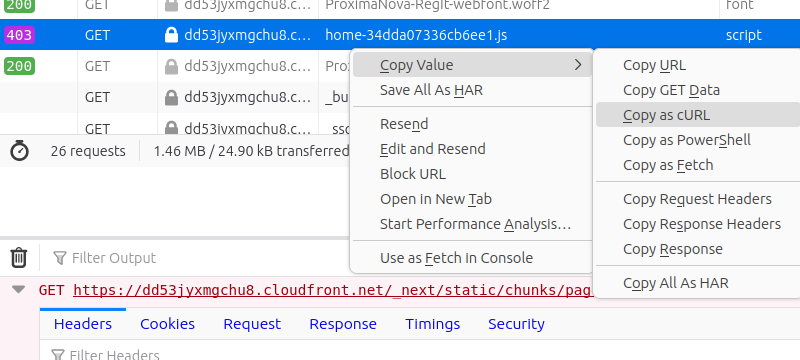

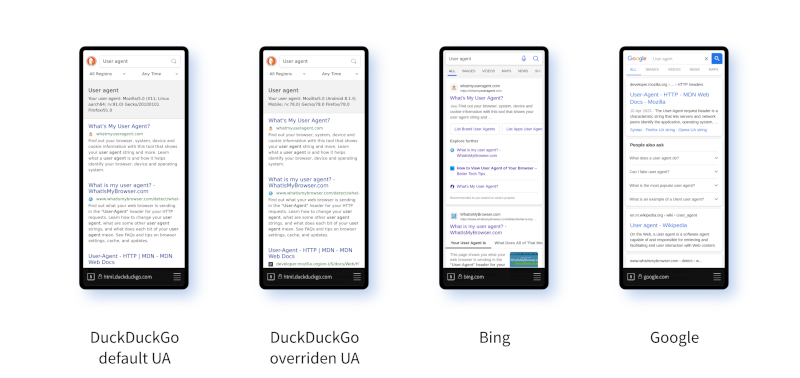

This seems to be my mind's (or maybe my body's?) way of telling me that I need to get my priorities straight. That there's something that I need to get done, resolved or somehow dealt with, and I need to do it urgently or I'll continue to have sleepless nights until I do.

The reason for this particular panic was my FOSDEM preparations, combined with a build up of projects at work that are coming to a head. For FOSDEM I have two talks to prepare (one about this blog, the other related to my work), the Linux on Mobile stand to help organise, the Sailfish community dinner on the Saturday to help organise, support for the HPC, Big Data & Data Science devroom on the Saturday, and also with the Python devroom on the Sunday. It's going to be a crazy busy event. But it's actually the run-up to it and the fact I've to still write my presentations, that's losing me sleep.

It's all fine: it's under control. But in order to prevent it spiralling out of control, I'm going to be taking a break from gecko development for a couple of weeks until things have calmed down. This will slow down development, which of course saddens me because more than anything else I just want ESR 91 to end up in a good, releasable, state. But as others wiser than I am have already cautioned, this also means keeping a positive and healthy state of mind.

I'll finish my last post on Day 149 (that's this Thursday). I'll start back up again on Thursday the 8th February, assuming all goes to plan!

But for the next couple of days there's still development to be done, so let's get straight back to it.

Today I'm still attempting to fix the NS_ERROR_FILE_NOT_FOUND error coming from SessionStore.jsm which I believe may be causing the Back and Forwards buttons in the browser to fail. It's become clear that the SessionStore.jsm file itself is missing (along with a bunch of other files listed in gecko-dev/browser/components/sessionstore/moz.build) but what's not clear is whether the problem is that the files should be there, or that the calls to the methods in this file shouldn't be there.

Overnight while lying in bed I came up with some kind of plan to help move things forwards. The call to the failing method is happening in updateSessionStoreForWindow() and this is only called by the exported method UpdateSessionStoreForWindow(). As far as I can tell this isn't executed by any JavaScript code, but because it has an IPDL interface it's possible for it to be called by C++ code as well.

And sure enough, it's being called twice in WindowGlobalParent.cpp. Once at the end of the WriteFormDataAndScrollToSessionStore() method on line 1260, like this:

nsresult WindowGlobalParent::WriteFormDataAndScrollToSessionStore(

const Maybe<FormData>& aFormData, const Maybe<nsPoint>& aScrollPosition,

uint32_t aEpoch) {

[...]

return funcs->UpdateSessionStoreForWindow(GetRootOwnerElement(), context, key,

aEpoch, update);

}

And another time at the end of the ResetSessionStore() method on line 1310, like this:

nsresult WindowGlobalParent::ResetSessionStore(uint32_t aEpoch) {

[...]

return funcs->UpdateSessionStoreForWindow(GetRootOwnerElement(), context, key,

aEpoch, update);

}

I've placed a breakpoint on these two locations to find out whether these are actually where it's being fired from. If you're being super-observant you'll notice I've not actually placed the breakpoints where I actually want them; I've had to place them earlier in the code (but crucially, still within the same methods). That's because it's not always possible to place breakpoints on the exact line you want.

The debugger will place it on the first line it can after the point you request. Because both of the cases I'm interested in are right at the end of the methods they're called in, when I attempt to put a breakpoint on the exact line the debugger places it instead in the next method along in the source code. That isn't much use for what I needed.

Hence I've placed them a little earlier in the code instead: on the first lines where they actually stick.
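
As a rough illustration of that placement rule, here's a small JavaScript sketch. It's only a model of the behaviour described above, not how gdb is implemented, and the line numbers and method names in it are made up for the example.

```javascript
// Hypothetical line table: which source lines carry code, and in which method.
// These numbers are invented for illustration, not taken from WindowGlobalParent.cpp.
const breakableLines = [
  { line: 10, method: "First" },
  { line: 12, method: "First" },
  { line: 15, method: "First" },
  { line: 20, method: "Second" },
];

// Model of the rule: use the first breakable line at or after the requested one.
function resolveBreakpoint(requested, table) {
  const sorted = [...table].sort((a, b) => a.line - b.line);
  return sorted.find(entry => entry.line >= requested) ?? null;
}

const early = resolveBreakpoint(11, breakableLines);
const late = resolveBreakpoint(17, breakableLines);
console.log(early); // lands on line 12, still inside First
console.log(late);  // lands on line 20, which belongs to Second
```

Asking for a line past the last breakable line of a method is the second case: the breakpoint slides into the following method, which is why placing it a few lines earlier is the safer bet.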

bash-5.0$ EMBED_CONSOLE=1 MOZ_LOG="EmbedLite:5" gdb sailfish-browser

(gdb) b WindowGlobalParent.cpp:1260

Breakpoint 5 at 0x7fbbc8e31c: file dom/ipc/WindowGlobalParent.cpp, line 1260.

(gdb) b WindowGlobalParent.cpp:1294

Breakpoint 9 at 0x7fbbc8e688: file dom/ipc/WindowGlobalParent.cpp, line 1294.

(gdb) r

[...]

JavaScript error: resource:///modules/sessionstore/SessionStore.jsm, line 541:

NS_ERROR_FILE_NOT_FOUND:

CONSOLE message:

[JavaScript Error: "NS_ERROR_FILE_NOT_FOUND: " {file:

"resource:///modules/sessionstore/SessionStore.jsm" line: 541}]

@resource:///modules/sessionstore/SessionStore.jsm:541:3

SSF_updateSessionStoreForWindow@resource://gre/modules/

SessionStoreFunctions.jsm:120:5

UpdateSessionStoreForStorage@resource://gre/modules/

SessionStoreFunctions.jsm:54:35

Thread 10 "GeckoWorkerThre" hit Breakpoint 5, mozilla::dom::WindowGlobalParent::

WriteFormDataAndScrollToSessionStore (this=this@entry=0x7f81164520,

aFormData=..., aScrollPosition=..., aEpoch=0)

at dom/ipc/WindowGlobalParent.cpp:1260

1260 windowState.mHasChildren.Construct() = !context->Children().IsEmpty();

(gdb) n

709 ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/Span.h:

No such file or directory.

(gdb)

1260 windowState.mHasChildren.Construct() = !context->Children().IsEmpty();

(gdb)

1262 JS::RootedValue update(jsapi.cx());

(gdb)

1263 if (!ToJSValue(jsapi.cx(), windowState, &update)) {

(gdb)

1267 JS::RootedValue key(jsapi.cx(), context->Top()->PermanentKey());

(gdb)

1269 return funcs->UpdateSessionStoreForWindow(GetRootOwnerElement(),

context, key,

(gdb) n

1297 ${PROJECT}/obj-build-mer-qt-xr/dist/include/js/RootingAPI.h:

No such file or directory.

(gdb)

JavaScript error: resource:///modules/sessionstore/SessionStore.jsm, line 541:

NS_ERROR_FILE_NOT_FOUND:

1267 JS::RootedValue key(jsapi.cx(), context->Top()->PermanentKey());

(gdb) c

Continuing.

It's clear from the above that the WriteFormDataAndScrollToSessionStore() method is being called and is then going on to call the missing JavaScript method. We can even see the error coming from the JavaScript as we step out of the calling method.

You'll notice from the output that there's another, earlier, NS_ERROR_FILE_NOT_FOUND error before the breakpoint hits. This is coming from a different spot: line 120 of SessionStoreFunctions.jsm rather than the line 105 we were looking at here.

This new error is called from line 54 of the same file (we can see this from the backtrace in the log output) which is in the UpdateSessionStoreForStorage() method in the file. So where is this being called from?

$ grep -rIn "UpdateSessionStoreForStorage" * --include="*.js" \

--include="*.jsm" --include="*.cpp" --exclude-dir="obj-build-mer-qt-xr"

gecko-dev/docshell/base/CanonicalBrowsingContext.cpp:2233:

return funcs->UpdateSessionStoreForStorage(Top()->GetEmbedderElement(), this,

gecko-dev/docshell/base/CanonicalBrowsingContext.cpp:2255:

void CanonicalBrowsingContext::UpdateSessionStoreForStorage(

gecko-dev/dom/storage/SessionStorageManager.cpp:854:

CanonicalBrowsingContext::UpdateSessionStoreForStorage(

gecko-dev/toolkit/components/sessionstore/SessionStoreFunctions.jsm:47:

function UpdateSessionStoreForStorage(

gecko-dev/toolkit/components/sessionstore/SessionStoreFunctions.jsm:66:

"UpdateSessionStoreForStorage",

From this we can see it's being called in a few places, all of them from C++ code. Again, that means we can explore them with gdb using breakpoints. Let's give this a go as well.

(gdb) break CanonicalBrowsingContext.cpp:2213

Breakpoint 7 at 0x7fbc7c6abc: file docshell/base/CanonicalBrowsingContext.cpp,

line 2213.

(gdb) r

[...]

Thread 10 "GeckoWorkerThre" hit Breakpoint 7, mozilla::dom::

CanonicalBrowsingContext::WriteSessionStorageToSessionStore

(this=0x7f80b6dee0, aSesssionStorage=..., aEpoch=0)

at docshell/base/CanonicalBrowsingContext.cpp:2213

2213 AutoJSAPI jsapi;

(gdb) bt

#0 mozilla::dom::CanonicalBrowsingContext::WriteSessionStorageToSessionStore

(this=0x7f80b6dee0, aSesssionStorage=..., aEpoch=0)

at docshell/base/CanonicalBrowsingContext.cpp:2213

#1 0x0000007fbc7c6f54 in mozilla::dom::CanonicalBrowsingContext::

<lambda(const mozilla::MozPromise<nsTArray<mozilla::dom::SSCacheCopy>,

mozilla::ipc::ResponseRejectReason, true>::ResolveOrRejectValue&)>::

operator() (valueList=..., __closure=0x7f80bd70c8)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/Variant.h:768

#2 mozilla::MozPromise<nsTArray<mozilla::dom::SSCacheCopy>, mozilla::ipc::

ResponseRejectReason, true>::InvokeMethod<mozilla::dom::

CanonicalBrowsingContext::UpdateSessionStoreSessionStorage(const

std::function<void()>&)::<lambda(const mozilla::MozPromise<nsTArray

<mozilla::dom::SSCacheCopy>, mozilla::ipc::ResponseRejectReason, true>::

ResolveOrRejectValue&)>, void (mozilla::dom::CanonicalBrowsingContext::

UpdateSessionStoreSessionStorage(const std::function<void()>&)::

<lambda(const mozilla::MozPromise<nsTArray<mozilla::dom::SSCacheCopy>,

mozilla::ipc::ResponseRejectReason, true>::ResolveOrRejectValue&)>::*)

(const mozilla::MozPromise<nsTArray<mozilla::dom::SSCacheCopy>,

mozilla::ipc::ResponseRejectReason, true>::ResolveOrRejectValue&) const,

mozilla::MozPromise<nsTArray<mozilla::dom::SSCacheCopy>, mozilla::ipc::

ResponseRejectReason, true>::ResolveOrRejectValue>

(aValue=..., aMethod=<optimized out>,

[...]

#25 0x0000007fb78b289c in ?? () from /lib64/libc.so.6

(gdb) n

[LWP 8594 exited]

2214 if (!jsapi.Init(wrapped->GetJSObjectGlobal())) {

(gdb)

2218 JS::RootedValue key(jsapi.cx(), Top()->PermanentKey());

(gdb)

2220 Record<nsCString, Record<nsString, nsString>> storage;

(gdb)

2221 JS::RootedValue update(jsapi.cx());

(gdb)

2223 if (!aSesssionStorage.IsEmpty()) {

(gdb)

2230 update.setNull();

(gdb)

2233 return funcs->UpdateSessionStoreForStorage(Top()->

GetEmbedderElement(), this,

(gdb)

1297 ${PROJECT}/obj-build-mer-qt-xr/dist/include/js/RootingAPI.h:

No such file or directory.

(gdb)

JavaScript error: resource:///modules/sessionstore/SessionStore.jsm, line 541:

NS_ERROR_FILE_NOT_FOUND:

2221 JS::RootedValue update(jsapi.cx());

(gdb)

2220 Record<nsCString, Record<nsString, nsString>> storage;

(gdb)

This new breakpoint is hit and once again, stepping through the code shows the problem method being called and also triggering the JavaScript error we're concerned about.

This is all good stuff. Looking through the ESR 78 code there doesn't appear to be anything equivalent in CanonicalBrowsingContext.cpp. But now that I know this is where the problem is happening, I can at least find the commit that introduced the changes and use that to find out more. Here's the output from git blame, but please forgive the terrible formatting: it's very hard to line-wrap this output cleanly.

$ git blame docshell/base/CanonicalBrowsingContext.cpp \

-L :WriteSessionStorageToSessionStore

dd51467 (Andreas Farre 2021-05-26 2204) nsresult CanonicalBrowsingContext::

WriteSessionStorageToSessionStore(

dd51467 (Andreas Farre 2021-05-26 2205) const nsTArray<SSCacheCopy>&

aSesssionStorage, uint32_t aEpoch) {

dd51467 (Andreas Farre 2021-05-26 2206) nsCOMPtr<nsISessionStoreFunctions> funcs =

dd51467 (Andreas Farre 2021-05-26 2207) do_ImportModule("resource://gre/

modules/SessionStoreFunctions.jsm");

dd51467 (Andreas Farre 2021-05-26 2208) if (!funcs) {

dd51467 (Andreas Farre 2021-05-26 2209) return NS_ERROR_FAILURE;

dd51467 (Andreas Farre 2021-05-26 2210) }

dd51467 (Andreas Farre 2021-05-26 2211)

dd51467 (Andreas Farre 2021-05-26 2212) nsCOMPtr<nsIXPConnectWrappedJS>

wrapped = do_QueryInterface(funcs);

dd51467 (Andreas Farre 2021-05-26 2213) AutoJSAPI jsapi;

dd51467 (Andreas Farre 2021-05-26 2214) if (!jsapi.Init(wrapped->

GetJSObjectGlobal())) {

dd51467 (Andreas Farre 2021-05-26 2215) return NS_ERROR_FAILURE;

dd51467 (Andreas Farre 2021-05-26 2216) }

dd51467 (Andreas Farre 2021-05-26 2217)

2b70b9d (Kashav Madan 2021-06-26 2218) JS::RootedValue key(jsapi.cx(),

Top()->PermanentKey());

2b70b9d (Kashav Madan 2021-06-26 2219)

dd51467 (Andreas Farre 2021-05-26 2220) Record<nsCString, Record<nsString,

nsString>> storage;

dd51467 (Andreas Farre 2021-05-26 2221) JS::RootedValue update(jsapi.cx());

dd51467 (Andreas Farre 2021-05-26 2222)

dd51467 (Andreas Farre 2021-05-26 2223) if (!aSesssionStorage.IsEmpty()) {

dd51467 (Andreas Farre 2021-05-26 2224) SessionStoreUtils::

ConstructSessionStorageValues(this,

aSesssionStorage,

dd51467 (Andreas Farre 2021-05-26 2225) storage);

dd51467 (Andreas Farre 2021-05-26 2226) if (!ToJSValue(jsapi.cx(), storage,

&update)) {

dd51467 (Andreas Farre 2021-05-26 2227) return NS_ERROR_FAILURE;

dd51467 (Andreas Farre 2021-05-26 2228) }

dd51467 (Andreas Farre 2021-05-26 2229) } else {

dd51467 (Andreas Farre 2021-05-26 2230) update.setNull();

dd51467 (Andreas Farre 2021-05-26 2231) }

dd51467 (Andreas Farre 2021-05-26 2232)

2b70b9d (Kashav Madan 2021-06-26 2233) return funcs->

UpdateSessionStoreForStorage(

Top()->GetEmbedderElement(), this,

2b70b9d (Kashav Madan 2021-06-26 2234) key, aEpoch, update);

dd51467 (Andreas Farre 2021-05-26 2235) }

dd51467 (Andreas Farre 2021-05-26 2236)

If you can get past the terrible formatting you should be able to see there are two commits of interest here. The first is dd51467c228cb from Andreas Farre and the second which was layered on top is 2b70b9d821c8e from Kashav Madan. Let's find out more about them both.

$ git log -1 dd51467c228cb

commit dd51467c228cb5c9ec9d9efbb6e0339037ec7fd5

Author: Andreas Farre <farre@mozilla.com>

Date: Wed May 26 07:14:06 2021 +0000

Part 7: Bug 1700623 - Make session storage session store work with Fission.

r=nika

Use the newly added session storage data getter to access the session

storage in the parent and store it in session store without a round

trip to content processes.

Depends on D111433

Differential Revision: https://phabricator.services.mozilla.com/D111434

For some reason the Phabricator link doesn't work for me, but we can still see the revision directly in the repository.

$ git log -1 2b70b9d821c8e

commit 2b70b9d821c8eaf0ecae987cfc57e354f0f9cc20

Author: Kashav Madan <kshvmdn@gmail.com>

Date: Sat Jun 26 20:25:29 2021 +0000

Bug 1703692 - Store the latest embedder's permanent key on CanonicalBrowsingContext, r=nika,mccr8

And include it in Session Store flushes to avoid dropping updates in case the browser is unavailable.

Differential Revision: https://phabricator.services.mozilla.com/D118385

There aren't many clues in these changes, in particular there's no hint of how the build system was changed to have these files included. However, digging around in this code has given me a better understanding of the structure and purpose of the different directories.

It seems to me that, in essence, the gecko-dev/browser/components/ directory where the missing files can be found contains modules that relate to the browser chrome and Firefox user interface, rather than the rendering engine. Typically this kind of content would be replicated on Sailfish OS by adding amended versions into the embedlite-components project. If it's browser-specific material, that would make sense.

As an example, in Firefox we can find AboutRedirector.h and AboutRedirector.cpp files, whereas on Sailfish OS there's a replacement AboutRedirector.js file in embedlite-components. Similarly Firefox has a DownloadsManager.jsm file that can be found in gecko-dev/browser/components/newtab/lib/. This seems to be replaced by EmbedliteDownloadManager.js in embedlite-components. Both have similar functionality based on the names of the methods contained in them, but the implementations are quite different.

Assuming this is correct, probably the right way to tackle the missing SessionStore.jsm and related files would be to move copies into embedlite-components. They'll need potentially quite big changes to align them with sailfish-browser, although hopefully this will largely be removing functionality that has already been implemented elsewhere (for example cookie save and restore).
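
To give a flavour of what a cut-down copy might look like, here's a minimal sketch of a stub module. It's an assumption about the shape rather than the actual change: the only name taken from the real code is updateSessionStoreFromTablistener(), and the body is deliberately empty.

```javascript
// Sketch of a stub SessionStore module. Only the method name comes from the
// real code; everything else is an illustrative assumption.
var EXPORTED_SYMBOLS = ["SessionStore"];

var SessionStore = Object.freeze({
  // Accept the same arguments the caller passes, but do nothing with them.
  updateSessionStoreFromTablistener(aBrowser, aBrowsingContext, aPermanentKey, aData) {
    // Intentionally empty: session data is assumed to be handled elsewhere.
  },
});

// Outside Gecko we can at least check that the call completes without throwing.
const result = SessionStore.updateSessionStoreFromTablistener(
  null, null, {}, { data: { windowstatechange: {} }, epoch: 0 }
);
console.log(result); // undefined
```

A stub like this keeps callers happy without pulling in the long list of dependencies the full module expects.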

I think I'll give these changes a go tomorrow.

Another thing I've pretty-much concluded while looking through this code is that it looks like it probably has nothing to do with the issue that's blocking the Back and Forward buttons from working after all. So I'll also need to make a separate task to track down the real source of that problem.

Right now I have a stack of tasks: SessionStore; Back/Forward failures; DuckDuckGo rendering. I mustn't lose sight of the fact that the real goal right now is to get DuckDuckGo rendering correctly. The other tasks are secondary, albeit with DuckDuckGo rendering potentially dependent on them.

That's it for today. More tomorrow and Thursday, but then a bit of a break.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

22 Jan 2024 : Day 146 #

I'm still in the process of fixing the Sec-Fetch-* headers. The data I collected yesterday resulted in a few conclusions:

- Opening a URL at the command line with a tab that was already open gives odd results.

- Opening a URL as a homepage gives odd results.

- The Back and Forwards buttons are broken so couldn't be tested.

- I didn't get time to test the JavaScript case.

- Open a URL using JavaScript simulating an HREF selection.

- Open a URL using a JavaScript redirect.

<!DOCTYPE html>

<html>

<head>

<meta http-equiv="content-type" content="text/html; charset=UTF-8">

<meta name="viewport" content="width=device-width, user-scalable=no"/>

<script type="text/javascript">

function reloadHref(url) {

setTimeout(() => window.location.href = url, 2000);

}

function reloadRedirect(url) {

setTimeout(() => window.location.replace(url), 2000);

}

</script>

</head>

<body>

<p><a href="javascript:reloadHref('https://duckduckgo.com');">

Simulate an HREF selection

</a></p>

<p><a href="javascript:reloadRedirect('https://duckduckgo.com');">

Simulate a redirect

</a></p>

</body>

</html>

Pretty straightforward stuff, but should do the trick. This allows me to test the effects of the URL being changed and record the results. In fact, let's get straight to the results. Here's what I found out by testing using this page:

| Situation | Expected | Flag set |
|---|---|---|
| Open a URL using JavaScript simulating a HREF selection. | 0 | 0 |
| Open a URL using a JavaScript redirect. | 0 | 0 |

I do however also notice that DuckDuckGo doesn't load correctly for these cases, so presumably there's still a problem with the headers here. I'll have to come back to that.

The inability to use the Back or Forwards buttons is also beginning to cause me trouble in day-to-day use, so now might be the time to fix that as well. Once I have I can test the remaining cases.

My suspicion is that the reason they don't work is related to this error that appears periodically when using the browser:

JavaScript error: resource://gre/modules/SessionStoreFunctions.jsm, line 105: NS_ERROR_FILE_NOT_FOUND:

Here's the code in the file that's generating this error:

SessionStore.updateSessionStoreFromTablistener(

aBrowser,

aBrowsingContext,

aPermanentKey,

{ data: { windowstatechange: aData }, epoch: aEpoch }

);

It could be an error happening inside SessionStore.updateSessionStoreFromTablistener() but two reasons make me think this is unlikely. First the error message clearly targets the calling location and if the error were inside this method I'd expect the error message to reflect that instead. Second there isn't anything obvious in the updateSessionStoreFromTablistener() body that might be causing an error like this. No obvious file accesses or anything like that.

A different possibility is that this, at the top of the SessionStoreFunctions.jsm file, is causing the problem:

XPCOMUtils.defineLazyModuleGetters(this, {

SessionStore: "resource:///modules/sessionstore/SessionStore.jsm",

});

This is a lazy getter, meaning that an attempt will be made to load the resource only at the point where a method from the module is used. Could it be that the SessionStore.jsm file is inaccessible? Then when a method from it is called the JavaScript interpreter tries to load the code in and fails, triggering the error.

A quick search inside the omni archive suggests this file is indeed missing:

$ find . -iname "SessionStore.jsm"

$ find . -iname "SessionStoreFunctions.jsm"

./omni/modules/SessionStoreFunctions.jsm

$ find . -iname "Services.jsm"

./omni/modules/Services.jsm

As we can see, in contrast the SessionStoreFunctions.jsm and Services.jsm files are both present and correct. Well, present at least. To test out the theory that this is the problem I've parachuted the file into omni. First from my laptop:

$ scp gecko-dev/browser/components/sessionstore/SessionStore.jsm \

defaultuser@10.0.0.116:./omni/modules/sessionstore/

SessionStore.jsm 100% 209KB 1.3MB/s 00:00

[...]

And then on my phone:

$ ./omni.sh pack

Omni action: pack

Packing from: ./omni

Packing to: /usr/lib64/xulrunner-qt5-91.9.1

This hasn't fixed the Back and Forwards buttons, but it has resulted in a new error. The fact that this error is now coming from inside SessionStore.jsm is encouraging.

JavaScript error: chrome://embedlite/content/embedhelper.js, line 259:

TypeError: sessionHistory is null

JavaScript error: resource:///modules/sessionstore/SessionStore.jsm, line 541:

NS_ERROR_FILE_NOT_FOUND:

Line 541 of SessionStore.jsm looks like this:

_globalState: new GlobalState(),

This also looks lazy-getter-related, since the only other reference to GlobalState() in this file is at the top, in this chunk of lazy-getter code:

XPCOMUtils.defineLazyModuleGetters(this, {

AppConstants: "resource://gre/modules/AppConstants.jsm",

AsyncShutdown: "resource://gre/modules/AsyncShutdown.jsm",

BrowserWindowTracker: "resource:///modules/BrowserWindowTracker.jsm",

DevToolsShim: "chrome://devtools-startup/content/DevToolsShim.jsm",

E10SUtils: "resource://gre/modules/E10SUtils.jsm",

GlobalState: "resource:///modules/sessionstore/GlobalState.jsm",

HomePage: "resource:///modules/HomePage.jsm",

PrivacyFilter: "resource://gre/modules/sessionstore/PrivacyFilter.jsm",

PromiseUtils: "resource://gre/modules/PromiseUtils.jsm",

RunState: "resource:///modules/sessionstore/RunState.jsm",

SessionCookies: "resource:///modules/sessionstore/SessionCookies.jsm",

SessionFile: "resource:///modules/sessionstore/SessionFile.jsm",

SessionSaver: "resource:///modules/sessionstore/SessionSaver.jsm",

SessionStartup: "resource:///modules/sessionstore/SessionStartup.jsm",

TabAttributes: "resource:///modules/sessionstore/TabAttributes.jsm",

TabCrashHandler: "resource:///modules/ContentCrashHandlers.jsm",

TabState: "resource:///modules/sessionstore/TabState.jsm",

TabStateCache: "resource:///modules/sessionstore/TabStateCache.jsm",

TabStateFlusher: "resource:///modules/sessionstore/TabStateFlusher.jsm",

setTimeout: "resource://gre/modules/Timer.jsm",

});

Sure enough, when I check, the GlobalState.jsm file is missing. It looks like these missing files are ones referenced in gecko-dev/browser/components/sessionstore/moz.build:

EXTRA_JS_MODULES.sessionstore = [

"ContentRestore.jsm",

"ContentSessionStore.jsm",

"GlobalState.jsm",

"RecentlyClosedTabsAndWindowsMenuUtils.jsm",

"RunState.jsm",

"SessionCookies.jsm",

"SessionFile.jsm",

"SessionMigration.jsm",

"SessionSaver.jsm",

"SessionStartup.jsm",

"SessionStore.jsm",

"SessionWorker.js",

"SessionWorker.jsm",

"StartupPerformance.jsm",

"TabAttributes.jsm",

"TabState.jsm",

"TabStateCache.jsm",

"TabStateFlusher.jsm",

]
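As a quick sanity check on that claim, the two lists above can be cross-referenced. The sketch below is plain JavaScript with the names copied by hand from the listings. It assumes that entries in EXTRA_JS_MODULES.sessionstore end up under resource:///modules/sessionstore/, which is my reading of the paths rather than something I've confirmed in the build system.

```javascript
// Lazy-getter URLs copied from the SessionStore.jsm listing above. Two
// resource://gre/ entries are included for contrast.
const getterUrls = [
  "resource://gre/modules/AppConstants.jsm",
  "resource:///modules/sessionstore/GlobalState.jsm",
  "resource://gre/modules/sessionstore/PrivacyFilter.jsm",
  "resource:///modules/sessionstore/RunState.jsm",
  "resource:///modules/sessionstore/SessionCookies.jsm",
  "resource:///modules/sessionstore/SessionFile.jsm",
  "resource:///modules/sessionstore/SessionSaver.jsm",
  "resource:///modules/sessionstore/SessionStartup.jsm",
  "resource:///modules/sessionstore/TabAttributes.jsm",
  "resource:///modules/sessionstore/TabState.jsm",
  "resource:///modules/sessionstore/TabStateCache.jsm",
  "resource:///modules/sessionstore/TabStateFlusher.jsm",
];

// File names copied from the moz.build listing above.
const mozBuildModules = new Set([
  "ContentRestore.jsm", "ContentSessionStore.jsm", "GlobalState.jsm",
  "RecentlyClosedTabsAndWindowsMenuUtils.jsm", "RunState.jsm",
  "SessionCookies.jsm", "SessionFile.jsm", "SessionMigration.jsm",
  "SessionSaver.jsm", "SessionStartup.jsm", "SessionStore.jsm",
  "SessionWorker.js", "SessionWorker.jsm", "StartupPerformance.jsm",
  "TabAttributes.jsm", "TabState.jsm", "TabStateCache.jsm",
  "TabStateFlusher.jsm",
]);

// Assumed install location for EXTRA_JS_MODULES.sessionstore entries.
const prefix = "resource:///modules/sessionstore/";

// Getters that point into the browser-side sessionstore directory.
const affected = getterUrls
  .filter((url) => url.startsWith(prefix))
  .map((url) => url.slice(prefix.length));

// Any of those that the moz.build list does not account for.
const unlisted = affected.filter((name) => !mozBuildModules.has(name));

console.log(affected.length, unlisted);
```

Under that assumption, every browser-side sessionstore getter maps onto an entry in the moz.build list, which fits with the idea that the whole directory is being skipped.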

It's not at all clear to me why these files aren't being included. The problem must be arising because either they're not being included when they should be, or they're being accessed when they shouldn't be. But it's late now, so I'm going to have to figure that out tomorrow.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

21 Jan 2024 : Day 145 #

Yesterday was a light day of gecko development (heavy on everything else but light on gecko). I managed to update the user agent overrides but not a lot else.

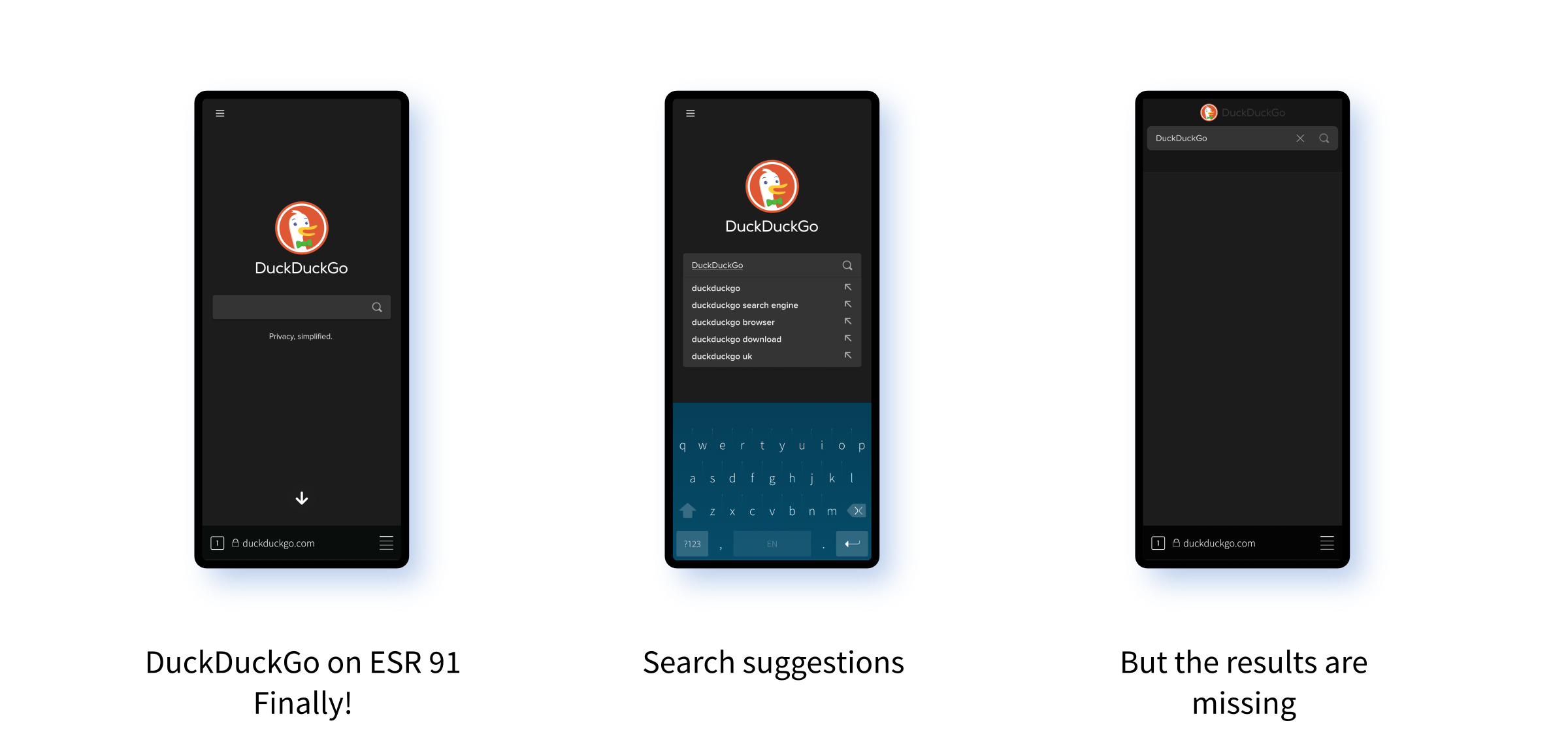

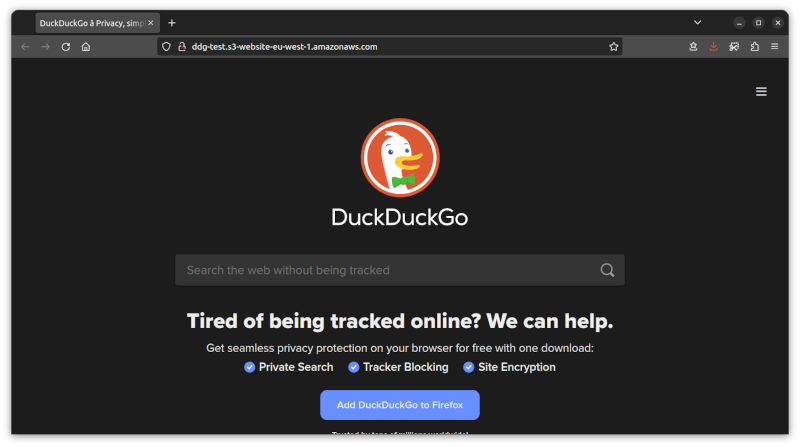


The one thing I did do was think about next steps, which brings us to today. To recap, the DuckDuckGo main page is now working. The search page inexplicably has no search results on it and so needs fixing. But the first thing I need to do is check whether the user interaction flags are propagating properly. My working assumption is that in the cases where they're needed they're being set. What I'm less certain about is whether they're not being set when they're not needed.

The purpose of the Sec-Fetch-* headers is to allow the browser to work in collaboration with the server. The user doesn't necessarily trust the page they're viewing and the server doesn't necessarily trust the browser. But the user should trust the browser. And the user should trust the browser to send the correct Sec-Fetch-* headers to the server. Assuming they're set correctly a trustworthy site can then act on them accordingly; for example, by only showing private data when the page isn't being displayed in an iframe, say.
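To illustrate the iframe example, here's a rough sketch of the kind of check a server could make. It's written in plain JavaScript and isn't taken from any real site: mayShowPrivateData() and the policy it applies are hypothetical. The header names and values (document and iframe for Sec-Fetch-Dest; same-origin and none for Sec-Fetch-Site) come from the Fetch Metadata specification.

```javascript
// Hypothetical server-side policy check. `headers` is assumed to be a plain
// object keyed by lower-case header name.
function mayShowPrivateData(headers) {
  const dest = headers["sec-fetch-dest"];
  const site = headers["sec-fetch-site"];

  // Design choice for this sketch: refuse when the metadata is missing.
  // A real site might instead fall back to other checks for older browsers.
  if (dest === undefined || site === undefined) {
    return false;
  }

  // "document" means a top-level navigation; a framed load sends "iframe".
  const topLevel = dest === "document";

  // "none" means the user initiated the load directly (address bar,
  // bookmark); "same-origin" means the site itself initiated it.
  const trustedInitiator = site === "same-origin" || site === "none";

  return topLevel && trustedInitiator;
}

// A page typed into the address bar.
console.log(mayShowPrivateData({
  "sec-fetch-dest": "document",
  "sec-fetch-site": "none",
}));

// The same page embedded in an iframe on another site.
console.log(mayShowPrivateData({
  "sec-fetch-dest": "iframe",
  "sec-fetch-site": "cross-site",
}));
```

A check like this is only as good as the headers the browser sends, which is why the flags feeding into them matter.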

Anyway, the point is, setting the value of these headers is a security feature. The implicit contract between user and browser requires that they're set correctly and the user trusts the browser will do this. The result of not doing so could make it easier for attackers to trick the user. So getting the flags set correctly is really important.

When it comes to understanding the header values and the flags that control them, the key gateway is EmbedLiteViewChild::RecvLoadURL(). The logic for deciding whether to set the flags happens before this is called and all of the logic that uses the flag happens after it. So I'll place a breakpoint on this method and check the value of the flag in various situations.

Which situations? Here are the ones I can think of. The flag should be set to true for the first six, where the URL arrives from outside the browser, and to false for the rest:

- Open a URL at the command line with no existing tabs.

- Open a URL at the command line with existing tabs.

- Open a URL via D-Bus with no existing tabs.

- Open a URL via D-Bus with existing tabs.

- Open a URL using xdg-open with no existing tabs.

- Open a URL using xdg-open with existing tabs.

- Open a URL as the homepage.

- Enter a URL in the address bar.

- Open an item from the history.

- Open a bookmark.

- Select a link on a page.

- Open a URL using JavaScript.

- Open a page using the Back button.

- Open a page using the Forwards button.

- Reloading a page.

$ gdb sailfish-browser

(gdb) b EmbedLiteViewChild::RecvLoadURL

Function "EmbedLiteViewChild::RecvLoadURL" not defined.

Make breakpoint pending on future shared library load? (y or [n]) y

Breakpoint 1 (EmbedLiteViewChild::RecvLoadURL) pending.

(gdb) r https://duckduckgo.com

Thread 8 "GeckoWorkerThre" hit Breakpoint 1, mozilla::embedlite::

EmbedLiteViewChild::RecvLoadURL (this=0x7f88ad1c60, url=...,

aFromExternal=@0x7f9f3d3598: true) at mobile/sailfishos/embedshared/

EmbedLiteViewChild.cpp:482

482 {

(gdb) p aFromExternal

$2 = (const bool &) @0x7f9f3d3598: true

(gdb) n

483 LOGT("url:%s", NS_ConvertUTF16toUTF8(url).get());

(gdb) n

867 ${PROJECT}/obj-build-mer-qt-xr/dist/include/nsCOMPtr.h:

No such file or directory.

(gdb) n

487 if (Preferences::GetBool("keyword.enabled", true)) {

(gdb) n

493 if (aFromExternal) {

(gdb) n

497 LoadURIOptions loadURIOptions;

(gdb) n

498 loadURIOptions.mTriggeringPrincipal = nsContentUtils::GetSystemPrincipal();

(gdb) p /x flags

$3 = 0x341000

(gdb) p/x flags & nsIWebNavigation::LOAD_FLAGS_FIXUP_SCHEME_TYPOS

$6 = 0x200000

(gdb) p/x flags & nsIWebNavigation::LOAD_FLAGS_ALLOW_THIRD_PARTY_FIXUP

$7 = 0x100000

(gdb) p/x flags & nsIWebNavigation::LOAD_FLAGS_DISALLOW_INHERIT_PRINCIPAL

$8 = 0x40000

(gdb) p/x flags & nsIWebNavigation::LOAD_FLAGS_FROM_EXTERNAL

$9 = 0x1000

(gdb) p /x flags - (nsIWebNavigation::LOAD_FLAGS_ALLOW_THIRD_PARTY_FIXUP

| nsIWebNavigation::LOAD_FLAGS_FIXUP_SCHEME_TYPOS

| nsIWebNavigation::LOAD_FLAGS_DISALLOW_INHERIT_PRINCIPAL

| nsIWebNavigation::LOAD_FLAGS_FROM_EXTERNAL)

$10 = 0x0

(gdb)
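The masking I did by hand in the debugger amounts to decomposing a bitfield. Here's a small sketch of the same check in plain JavaScript. The mask values are the ones gdb printed above, not values copied from the nsIWebNavigation definition, and decodeFlags() is just a hypothetical helper for illustration.

```javascript
// Mask values as reported by gdb in the session above.
const LOAD_FLAGS = {
  LOAD_FLAGS_FIXUP_SCHEME_TYPOS: 0x200000,
  LOAD_FLAGS_ALLOW_THIRD_PARTY_FIXUP: 0x100000,
  LOAD_FLAGS_DISALLOW_INHERIT_PRINCIPAL: 0x40000,
  LOAD_FLAGS_FROM_EXTERNAL: 0x1000,
};

// Split a flags value into the named bits it contains, plus whatever is left
// over once all of the known bits have been cleared.
function decodeFlags(flags) {
  const names = [];
  let remainder = flags;
  for (const [name, bit] of Object.entries(LOAD_FLAGS)) {
    if ((flags & bit) !== 0) {
      names.push(name);
      remainder &= ~bit;
    }
  }
  return { names, remainder };
}

const decoded = decodeFlags(0x341000);
console.log(decoded.names.join("\n"));
console.log("remainder: 0x" + decoded.remainder.toString(16));
```

A remainder of zero matches the final gdb result: those four flags account for every bit that's set, and LOAD_FLAGS_FROM_EXTERNAL is among them.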

In a couple of places (selecting links and reloading the page) the EmbedLiteViewChild::RecvLoadURL() method doesn't get called. For those cases I put a breakpoint on LoadInfo::GetLoadTriggeredFromExternal() instead. The process looks a little different:

(gdb) disable break

(gdb) break GetLoadTriggeredFromExternal

Breakpoint 2 at 0x7fb9d3430c: GetLoadTriggeredFromExternal. (2 locations)

(gdb) c

Continuing.

Thread 8 "GeckoWorkerThre" hit Breakpoint 2, mozilla::net::LoadInfo::

GetLoadTriggeredFromExternal (this=0x7f89170cf0,

aLoadTriggeredFromExternal=0x7f9f3d3150) at netwerk/base/LoadInfo.cpp:1478

1478 *aLoadTriggeredFromExternal = mLoadTriggeredFromExternal;

(gdb) p mLoadTriggeredFromExternal

$1 = false

(gdb)

I've been through and checked the majority of the cases separately. Here's a summary of the results once I apply these processes for all of the cases.

| Situation | Expected | Flag set |
|---|---|---|
| Open a URL at the command line with no existing tabs. | 1 | 1 |
| Open a URL at the command line with the same tab open. | 1 | 0 |
| Open a URL at the command line with a different tab open. | 1 | 1 |
| Open a URL via D-Bus with no existing tabs. | 1 | 1 |
| Open a URL via D-Bus with the same tab open. | 1 | No effect |
| Open a URL via D-Bus with a different tab open. | 1 | 1 |
| Open a URL using xdg-open with no existing tabs. | 1 | 1 |
| Open a URL using xdg-open with the same tab open. | 1 | No effect |
| Open a URL using xdg-open with a different tab open. | 1 | 1 |
| Open a URL as the homepage. | 0 | 1 |
| Enter a URL in the address bar. | 0 | 0 |
| Open an item from the history. | 0 | 0 |
| Open a bookmark. | 0 | 0 |
| Select a link on a page. | 0 | 0 |
| Open a URL using JavaScript. | 0 | Not tested |
| Open a page using the Back button. | 0 | Unavailable |
| Open a page using the Forwards button. | 0 | Unavailable |
| Reloading a page. | 0 | 0 |

There are some notable entries in the table, although broadly speaking the results are what I was hoping for. For example, when using D-Bus or xdg-open to open a website that's already open in a tab, there is no effect. I hadn't expected this, but having now seen the behaviour in action, it makes perfect sense and looks correct. For the case of opening a URL via the command line with the same tab open, I'll need to look into whether some other flag should be set instead; but on the face of it, this looks like something that may need fixing.

Similarly for opening a URL as the home page. I think the result is the reverse of what it should be, but I need to look into this more to check.

The forward and back button interactions are marked as "Unavailable". That's because the back and forward functionality is currently broken. I'm hoping that fixing Issue 1024 will also restore this functionality, after which I'll need to test this again.

Finally, I didn't get time to test the JavaScript case. I'll have to do that tomorrow.

So a few things still to fix, but hopefully over the next couple of days these can all be ironed out.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

20 Jan 2024 : Day 144 #